반응형

강화학습 공부를 시작했다.

리처드 S. 서튼 과 앤드류 바르토의 레전드 강화학습 입문서<Reinforcement Learning - Introduction> 원서를 읽고 있다. 글 쓰는 현재 시각 기준으로 인용수가 무려 83,785에 육박한다....

본 포스팅은 1장의 주요 하이라이트 문장을 따로 발췌하여 정리한 글이다. (한글 해석x)

1.3. Elements of Reinforcement Learning

- A policy defines the learning agent's way of behaving at a given time.

- The policy is the core of a reinforcement learning agent in the sense that it alone is sufficient to determine behavior.

- The reward signal is the primary basis for altering the policy.

- Whereas the reward signal indicates what is good in an immediate sense, a value function specifies what is good in the long run.

- Roughly speaking, the value of a state is the total amount of reward an agent can expect to accumulate over the future, starting from that state.

- Whereas rewards determine the immediate, intrinsic desirability of environmental state, values indicate the long-term desirability of states after taking into account the states that are likely to follow and the rewards are available.

- Rewards are in a sense primary, whereas values, as predictions of rewards, are secondary. Without rewards there could be no values, and the only purpose of estimating value is to achieve more reward.

- Action choices are made based on value judgements.

- We seek actions that bring about states of highest value, not highest reward, because these actions obtain the greatest amount of reward for us over the long run.

- Rewards are basically given directly by the environment, but values must be estimated and re-estimated from the sequneces of observations an agent makes over its entire lifetime.

- A model of the environment - this is something that mimics the behavior of the environment, or more generally, that allows inferences to be made about how the environment will behave.

- For example, given a state and action, the model might predict the resultant next state and next reward. Models are used for planning, by which we mean any way of deciding on a course of action by considering possible future situations before they are actually experienced.

- Modern reinforcement learning spans the spectrum from low-level, trial-and-error learning to high-level, deliberative planning.

1.5. AN EXTENDED EXAMPLE: TIC-TAC-TOE

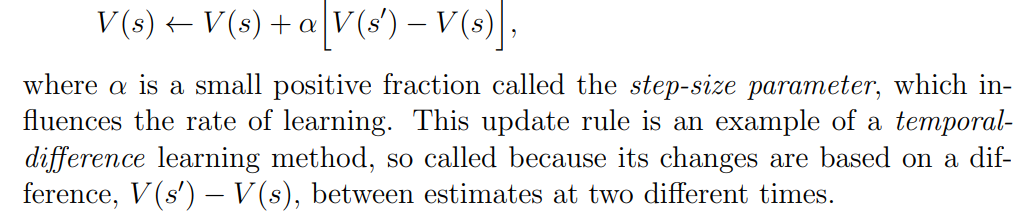

- More precisely, the current value of the earlier state is adjusted to be closer to the value of the later state. This can be done by moving the earlier state’s value a fraction of the way toward the value of the later state.

- If we let s denote the state before the greedy move, and s' the state after the move, then the update to the estimated value of s, denoted V (s), can be written as

- The artificial neural network provides the program with the ability to generalize from its experience, so that in new states it selects moves based on information saved from similar states faced in the past, as determined by the network.

- How well a reinforcement learning system can work in problems with such large state sets is intimately tied to how appropriately it can generalize from past experience.

- Because models have to be reasonably accurate to be useful, model-free methods can have advantages over more complex methods when the real bottleneck in solving a problem is the difficulty of constructing a sufficiently accurate environment model.

1.6. Summary

- Reinforcement learning is a computational approach to understanding and automating goal-directed learning and decision-making.

- It is distinguished from other computational approaches by its emphasis on learning by an agent from direct interaction with its environment, without relying on exemplary supervision or complete models of the environment.

- Reinforcement learning uses a formal framework defining the interaction between a learning agent and its environment in terms of states, actions, and rewards.

- The concepts of value and value functions are the key features of most of the reinforcement learning methods that we consider in this book.

1.7. History of Reinforcement Learning

- In this book, we consider all of the work in optimal control also to be, in a sense, work in reinforcement learning. We define a reinforcement learning method as any effective way of solving reinforcement learning problems, and it is now clear that these problems are closely related to optimal control problems, particularly stochastic optimal control problems such as those formulated as MDPs.

- Reinforcement is the strengthening of a pattern of behavior as a result of an animal receiving a stimulus—a reinforcer—in an appropriate temporal relationship with another stimulus or with a response.

- Particularly influential was Minsky’s paper “Steps Toward Artificial Intelligence” (Minsky, 1961), which discussed several issues relevant to reinforcement learning, including what he called the credit assignment problem: How do you distribute credit for success among the many decisions that may have been involved in producing it? All of the methods we discuss in this book are, in a sense, directed toward solving this problem.

- Widrow, Gupta, and Maitra (1973) modified the Least-Mean Square (LMS) algorithm of Widrow and Hoff (1960) to produce a reinforcement learning rule that could learn from success and failure signals instead of from training examples. They called this form of learning “selective bootstrap adaptation” and described it as “learning with a critic” instead of “learning with a teacher.” They analyzed this rule and showed how it could learn to play blackjack. This was an isolated foray into reinforcement learning by Widrow, whose contributions to supervised learning were much more influential. Our use of the term “critic” is derived from Widrow, Gupta, and Maitra’s paper.

반응형